Facebook Apparently Terrified by Default Privacy

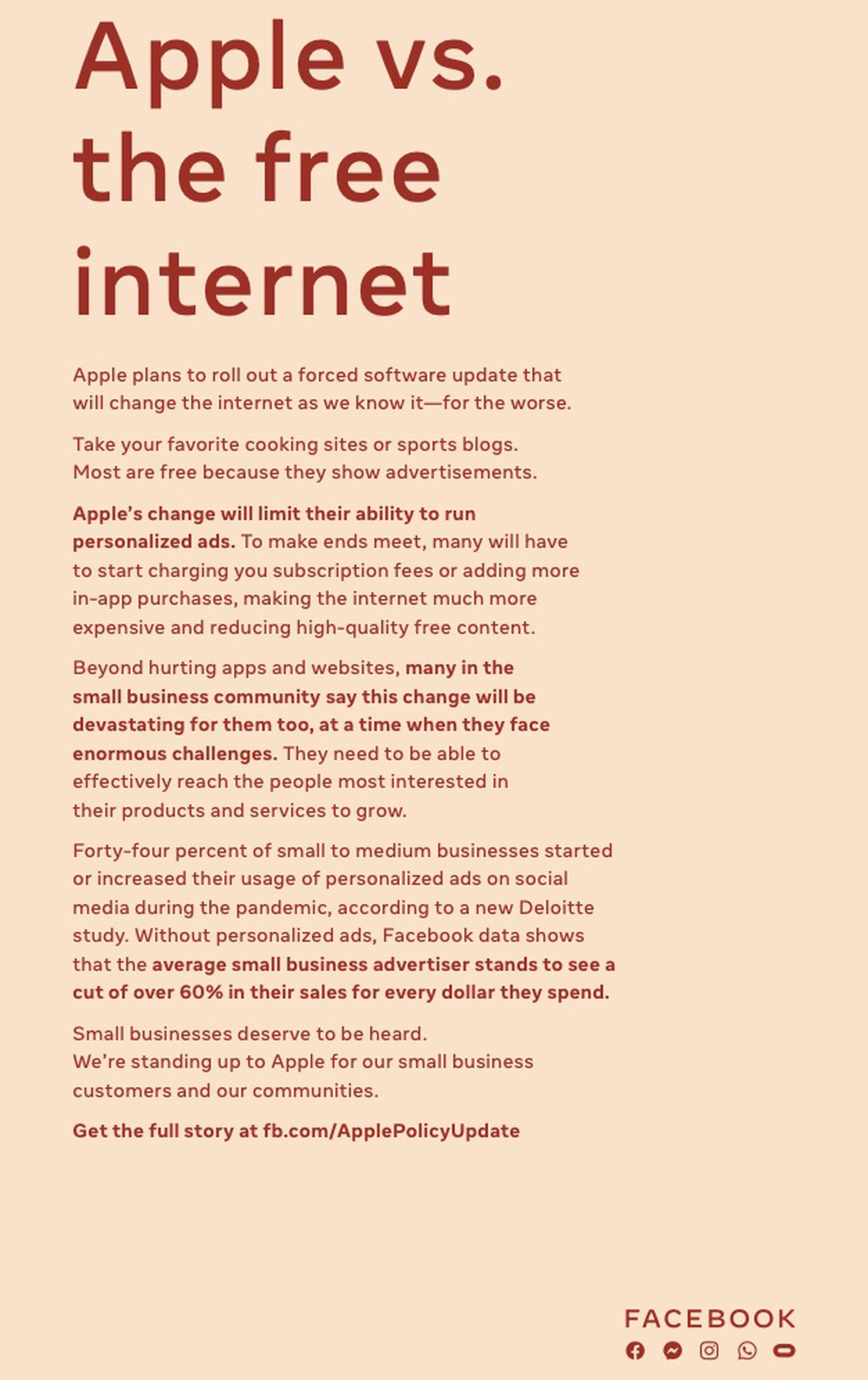

Judging by their full-page advertisements in various newspapers, Facebook is extremely upset about Apple’s upcoming move to make cross-app privacy the default. Their two main “hooks” are that it will hurt small businesses and that it will make the internet less “free”. I don’t believe either of these is true.

What is clear to me is that this move will hurt Facebook’s ability to collect piles of information about its users, and to leverage that to collect huge amounts of advertising money.

The Current Situation

Before I go on, a caveat here: while I’m a programmer and a researcher, I am not a security and privacy researcher, so you should check the things I’m telling you.

With that said, here is a brief summary of the current situation. Apple’s iOS includes a notion of an “IDFA”, an “identifier for advertisers.” Apps can read this IDFA, and can send information about what a user is doing in the app back to their central servers, tagged with it. Because every app on the device sees the same IDFA, this information can then be connected to information uploaded by other apps in order to build a detailed picture of you.
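To make the “connected to other information” step concrete, here is a toy Python model of the join. Everything in it is hypothetical: the app names, the identifiers, and the events are made up, and real ad networks’ pipelines are far more elaborate (I don’t know their details). It only shows why a single identifier shared across apps makes merging so easy.

```python
from collections import defaultdict

# Hypothetical events uploaded by two unrelated apps.
# Each record carries the device's IDFA, which is the same in every app.
FITNESS_APP_EVENTS = [
    {"idfa": "6D92078A-8246-4BA4-AE5B-76104861E7DC", "event": "ran 5 km"},
    {"idfa": "1E2DFA89-496A-47FD-9941-DF1FC4E6484A", "event": "joined a yoga class"},
]
SHOPPING_APP_EVENTS = [
    {"idfa": "6D92078A-8246-4BA4-AE5B-76104861E7DC", "event": "viewed running shoes"},
]


def build_profiles(*event_streams):
    """Merge events from several apps into one profile per IDFA."""
    profiles = defaultdict(list)
    for stream in event_streams:
        for record in stream:
            # The shared IDFA is the join key that links activity across apps.
            profiles[record["idfa"]].append(record["event"])
    return dict(profiles)


profiles = build_profiles(FITNESS_APP_EVENTS, SHOPPING_APP_EVENTS)
print(profiles["6D92078A-8246-4BA4-AE5B-76104861E7DC"])
# ['ran 5 km', 'viewed running shoes']
```

Neither app knows much on its own, but once both uploads land in the same place, the runner and the shoe shopper turn out to be the same person.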

This allows Facebook and others to understand what you’re like, and how best to separate you from your money by showing you advertising that’s likely to convince you to buy things. It also allows Facebook to identify characteristics it can use to present content that you will be really, really interested in.

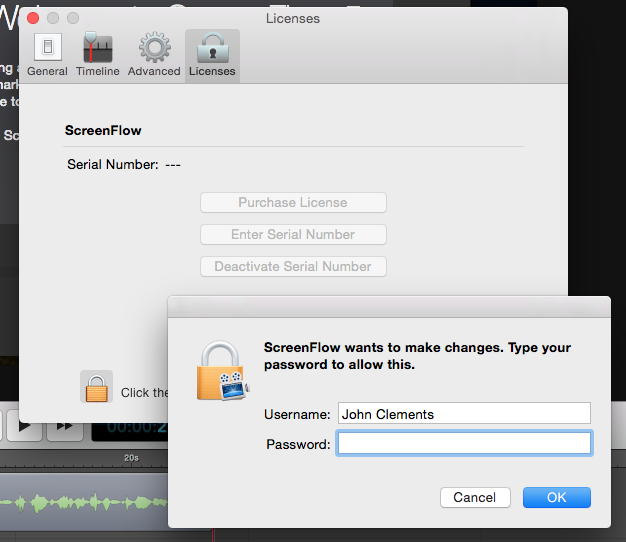

Back in June 2020, at its Worldwide Developers Conference (WWDC), Apple announced a change to the way IDFAs would work. Since this is a developer conference, Apple was addressing app developers, telling them how they would use its new framework to observe a user’s IDFA:

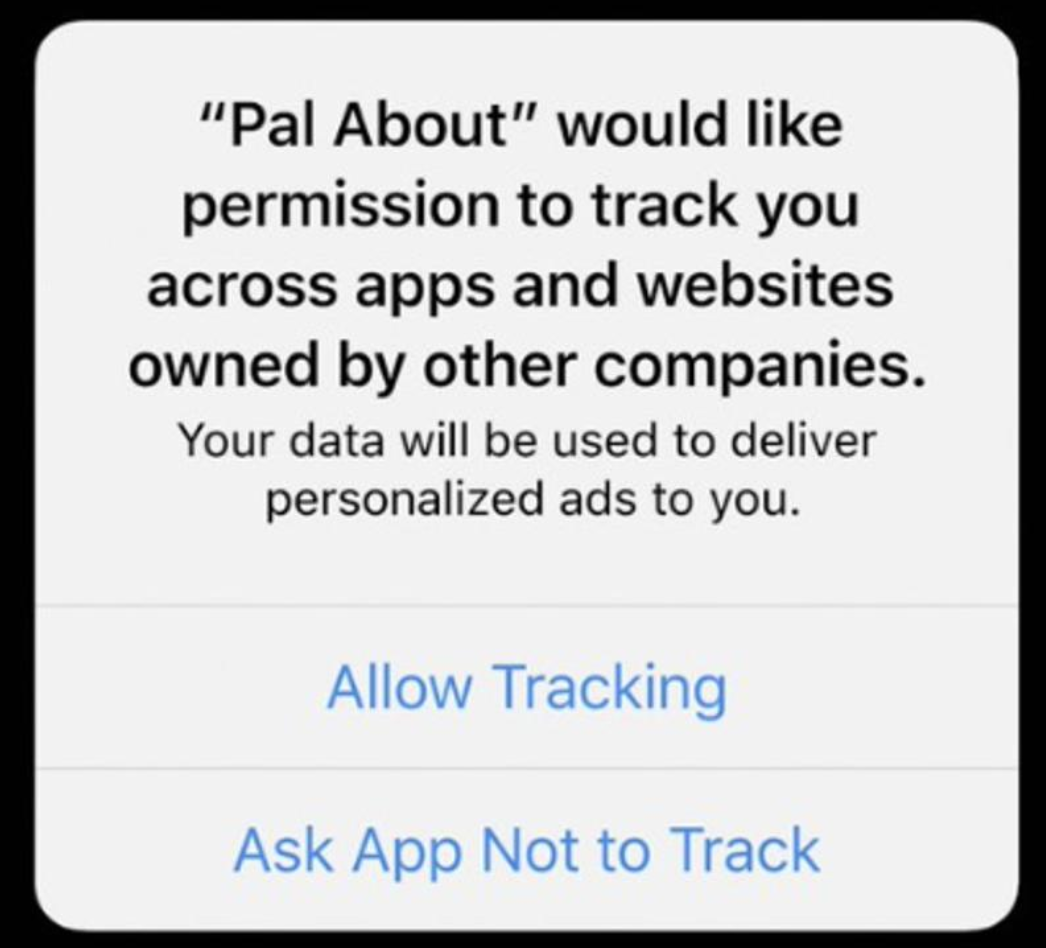

"To ask for permission to track a user, call the AppTrackingTransparency framework, which will result in a prompt that looks like this.

[opt-in tracking dialog]

This framework requires the NSUserTrackingUsageDescription key to be filled out in your Info.plist.

You should add a clear description of why you’re asking to track the user.

The IDFA is one of the identifiers that is controlled by the new tracking permission.

To request permission to track the user, call the AppTrackingTransparency framework. If users select “Ask App Not to Track,” the IDFA API will return all zeros."

So, which button would you click?

My guess is that relatively few people will click the “Allow Tracking” button.

For everyone who declines, Facebook and other apps will not be able to observe the IDFA, and will lose this extremely convenient way to gather and share information about you.
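Here is a toy Python model of what the all-zeros IDFA from the WWDC description does to that kind of cross-app merging. The identifiers and events are made up, and the filtering rule is my own assumption about what a tracker would sensibly do; it is not a description of any real company’s system.

```python
from collections import defaultdict

# Value the IDFA API returns once a user taps "Ask App Not to Track".
ZERO_IDFA = "00000000-0000-0000-0000-000000000000"

# Hypothetical events: the first user declined tracking, the second allowed it.
FITNESS_APP_EVENTS = [
    {"idfa": ZERO_IDFA, "event": "ran 5 km"},
    {"idfa": "1E2DFA89-496A-47FD-9941-DF1FC4E6484A", "event": "joined a yoga class"},
]
SHOPPING_APP_EVENTS = [
    {"idfa": ZERO_IDFA, "event": "viewed running shoes"},
]


def build_profiles(*event_streams):
    """Merge events into one profile per IDFA, skipping zeroed identifiers."""
    profiles = defaultdict(list)
    for stream in event_streams:
        for record in stream:
            if record["idfa"] == ZERO_IDFA:
                # Every opted-out device reports this same value, so it cannot
                # tell one person from another; the event can't be linked.
                continue
            profiles[record["idfa"]].append(record["event"])
    return dict(profiles)


profiles = build_profiles(FITNESS_APP_EVENTS, SHOPPING_APP_EVENTS)
print(profiles)
# {'1E2DFA89-496A-47FD-9941-DF1FC4E6484A': ['joined a yoga class']}
```

The user who opted in is still profiled; the one who declined simply disappears from the join, which is exactly the data Facebook stands to lose.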

Small Businesses

What does this mean for small businesses?

Let me offer another caveat here, and tell you that I am not an expert on small businesses, their use of targeted advertising, and the effectiveness of their use of targeted advertising.

However, I’m going to observe that small businesses, by and large, are hurt by online advertising in general, and are unlikely to have the kinds of data science teams that are best positioned to sift through and profit from reams of consumer data.

Some (like Facebook?) might respond that their interfaces are designed to make high-quality data analytics available to even businesses that don’t have giant data science teams. I don’t think this argument holds much water, and I think that whenever Facebook provides better information on their users, companies with high data-science ability will be the ones best positioned to capitalize on it.

Facebook makes the specific claim that “Facebook data shows that the average small business owner stands to see a cut of over 60% in their sales for every dollar they spend.” This claim is (I claim) fairly preposterous; I’m guessing that they’re measuring the relative effectiveness of targeted and non-targeted advertising. What this ignores is that people are still going to buy things, so if targeted advertising goes away, more advertising dollars will go toward non-targeted advertising.

Of course, they might be right if the stuff that people are buying because of targeted advertising is worthless junk that they didn’t need. In that case, maybe targeted advertising really is important, because it allows advertisers to sell us worthless junk. I’m not sure that’s a good argument for allowing targeted advertising.

The Free Internet

Next, Facebook makes the argument that this move is in opposition to the “free” internet. Specifically, they argue that “Many [sites] will have to start charging you subscription fees or adding more in-app purchases, making the internet much more expensive and reducing high-quality free content.”

This paragraph makes it clear that Facebook’s definition of “free” here is “free as in beer”, not “free as in speech”. That is, Apple’s change will not reduce the liberty of the internet. It will simply make it harder for sites to pay for their content-generation using targeted advertising.

Again, I think this argument doesn’t hold water, for the same reason given in the case of small businesses; unless the items being advertised are completely useless, shifting from targeted to untargeted advertising will simply level the advertising playing field, and make it harder for large companies to leverage their data science teams to separate you from your money.

However, a reduction in targeted advertising will make one huge difference: small businesses will be more likely to work directly with the sites that their prospective customers use, rather than giving a huge slice of their money to middlemen like Facebook that mediate and control access to targeted audiences. If I think that my customers read the Wall Street Journal, I will contact the Wall Street Journal and place an ad. This may sound like business as usual, but it’s absolutely terrifying to advertising middlemen whose current value lies in holding all of the information about customers and placing advertisements in front of the people most likely to click on them.

In Summary…

To summarize: tracking is still possible after this move; it’s just that users must opt into it, rather than having it on by default.

And here’s the thing. If you’re as terrified as this by the idea that users might have to explicitly consent to your practices… maybe you’re not actually the good guy.

Or, as That Mitchell and Webb Look put it: “Hans … are we the baddies?”

Further reading:

Mozilla campaign to thank Apple

Apple Developer Conference Video (with transcript)

Forbes article on the proposed change

EFF opinion on advertiser IDs (both Google’s & Apple’s)

Facebook full-page ad