It’s hard to predict student scores

Good news, students. I can’t accurately predict your final grades based solely on your first two assignments, quizzes, and labs.

I tried, though…

First, I took the data from Winter 2015. I split the students into a training set (70%) and a validation set (30%). I used ordinary least squares to fit a linear weighting of the scores on the first two labs, the first two assignments, and the first two quizzes. I then applied this weighting to the validation set to see how well it predicted final grades for students it hadn't seen.
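For the curious, here's a minimal sketch of that procedure in Python with NumPy. It is not the code behind the numbers in this post: the data is synthetic, and the function name `split_fit_evaluate`, the random seed, and the intercept column are all assumptions made for illustration.

```python
import numpy as np

def split_fit_evaluate(X, y, train_frac=0.7, seed=0):
    """Split rows into train/validation, fit OLS on train, return RMS errors."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(y))
    n_train = int(round(train_frac * len(y)))
    train, valid = order[:n_train], order[n_train:]

    # Append a column of ones so the fit includes an intercept (an assumption).
    A = np.column_stack([X, np.ones(len(y))])

    # Ordinary least squares: minimize ||A[train] @ w - y[train]||^2.
    w, *_ = np.linalg.lstsq(A[train], y[train], rcond=None)

    def rms(idx):
        residuals = A[idx] @ w - y[idx]
        return float(np.sqrt(np.mean(residuals ** 2)))

    return w, rms(train), rms(valid)


# Synthetic stand-in data: 100 hypothetical students, 6 early scores each
# (2 labs, 2 assignments, 2 quizzes), with a noisy made-up final grade.
rng = np.random.default_rng(42)
X = rng.uniform(40, 100, size=(100, 6))
y = X.mean(axis=1) + rng.normal(0, 10, size=100)

w, train_rms, valid_rms = split_fit_evaluate(X, y)
print(f"training RMS error:   {train_rms:.1f}")
print(f"validation RMS error: {valid_rms:.1f}")
```

The weights are fit only on the training rows, so the validation RMS error is the honest measure of how well the weighting generalizes.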

Short story: not accurate enough.

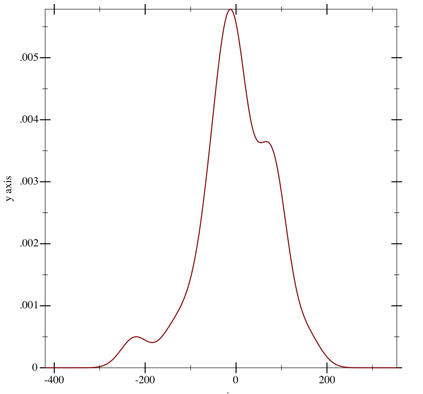

On the training set, the RMS error is 7.9 percentage points of the final grade, which is not great but might at least tell you whether you’re going to get an A or a C. Here’s a picture of the distribution of the errors on the training set:

distribution of errors on training set

The x axis is labeled in tenths of a percentage point. The plot shows the density of the errors, so the shape of the curve matters more than the exact values on the y axis.

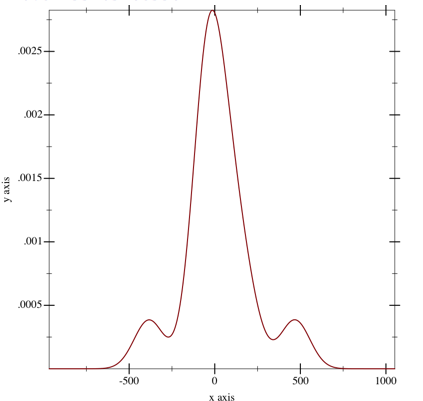

Unfortunately, on the validation set, things fell apart. Specifically, the RMS error was 19.1 percentage points, which is pretty terrible. Here’s the picture of the distribution of the errors:

distribution of errors on validation set

Ah well… I guess I’m going to have to grade the midterm.